Modernizing TANF data reporting

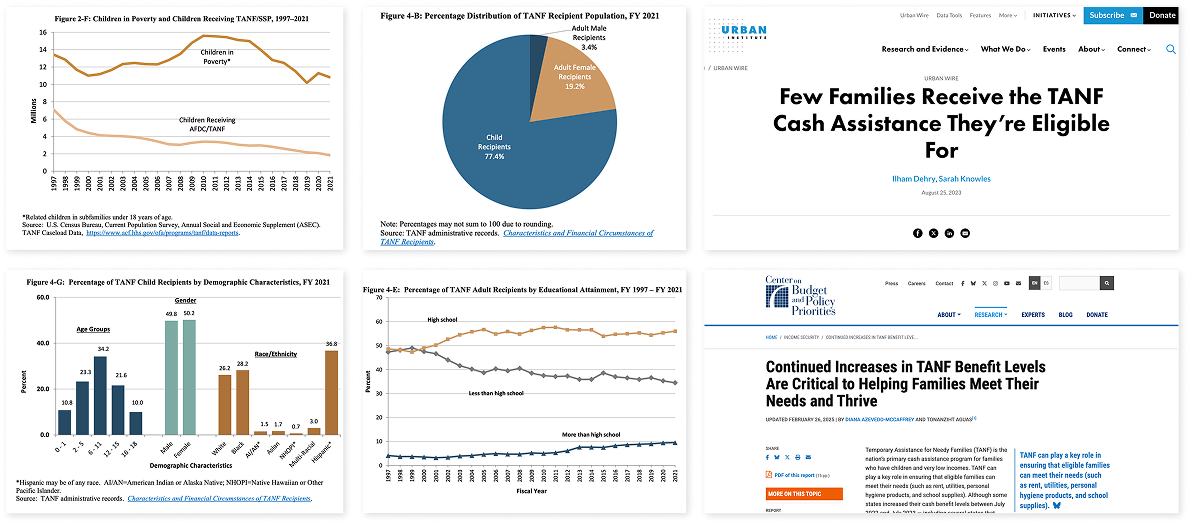

Every month, 800,000 families receive direct cash assistance through Temporary Assistance for Needy Families (TANF), a $16.5 billion federal block grant administered by state, territorial, and tribal governments. Grantees submit data quarterly through the TANF Data Reporting System (TDRS); that data is used to track national poverty trends and inform policy and programs serving low-income Americans.

I led foundational user research and prototyping to define the acquisition and scope of a new TANF Data Reporting System. My work included identifying key interaction and data needs for the new system, advising on an acquisition strategy to secure an agile, user-centered team, and coaching partners on iterative design practices that reduce the risk of IT project failure in government.

Outcomes:

- OFA updated its 20+ year old reporting system to a more secure, user-centered platform now used by over 85% of grantees.

- I trained 300+ federal contracting officers on market research and iterative design practices for federal IT projects.

- The 18F team hired and onboarded a cross-functional, agile design team within 4 months of RFP posting, significantly faster than most procurements.

Upgrading a 20+ year old data reporting system

Each quarter, 50 states, 75 tribes, 3 territories, and D.C. report the work activities and characteristics of families receiving direct cash assistance through the TANF Data Reporting System (TDRS). This decades-long dataset is used to analyze longitudinal poverty trends in America, identify patterns of who needs and receives benefits, and inform policy discussions in and out of government.

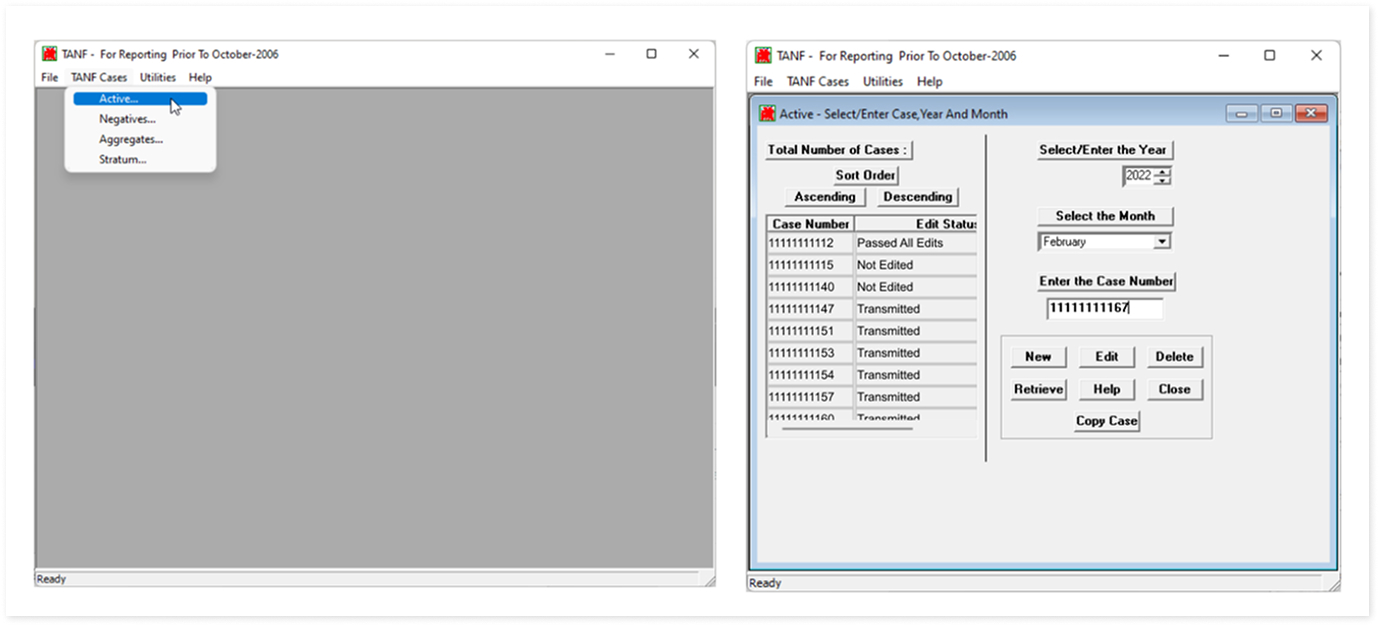

But the technology behind this data was difficult to use and maintain. The legacy system was:

- Inflexible: The system was difficult to adjust to changing legislative and policy requirements.

- Lacking automation and validation: There were no automated workflows or data validation, so grantees had to do a significant amount of manual work to prepare, load, and correct data files.

- A black box experience: Grantees did not have a user interface or in-app guidance to support them as they prepared and submitted data files.

- Lacking data ownership: The system did not provide OFA with full ownership of the inputted data.

Both federal staff managing the data and state, local, and tribal grantees providing the data faced painful user experiences that often led to untimely or incomplete data.

18F partnered with OFA to prototype and procure a new data reporting system that would upgrade the data reporting experience and ultimately improve the quality of the data used to make policy and program decisions affecting low-income families.

Leading research to define core needs, tasks, and audiences for a modern reporting system

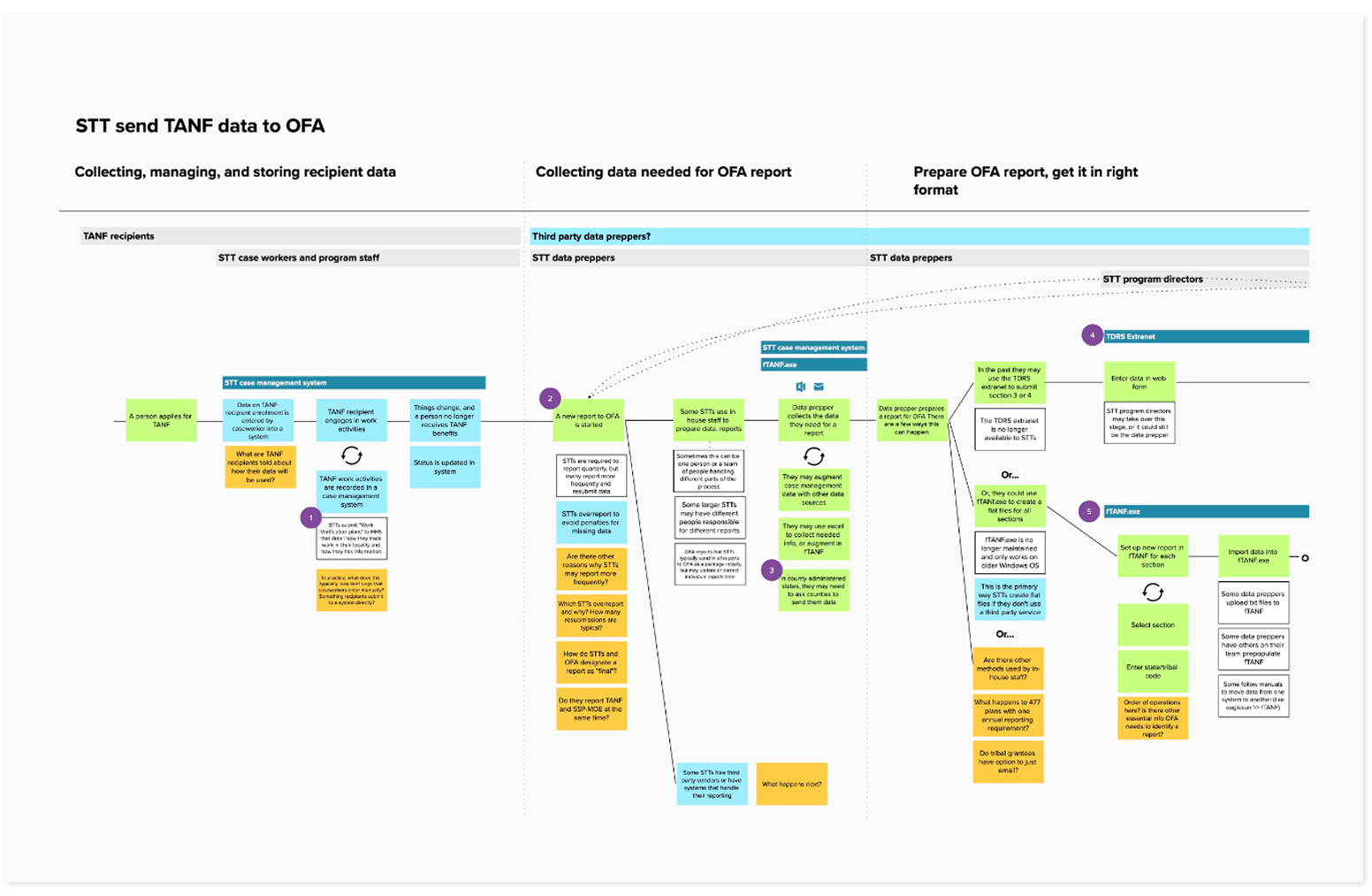

Mapping the "last mile" of reporting

Before bringing on a build team, we needed to define what a new system had to solve for and integrate with. I worked with the OFA product owner to prioritize research questions and develop a research plan with these goals:

- Map how data travels from state, tribal, and territory systems to OFA systems.

- Document the tools used to prepare and format data files, including anything working well for grantees.

- Identify how data preparation varies depending on resourcing and reporting situations (large state caseloads vs. small tribal caseloads, county-level administration).

- Understand existing communication patterns between grantees, the system, and OFA analysts on submission status and data errors.

I interviewed multiple OFA analysts and six grantees (5 states, 1 tribe) with diverse resources and reporting constraints. Based on the findings, I created a service blueprint that mapped existing experiences and data flows, which I then presented to the team and product owner. We used this service blueprint to identify areas of focus for the modernization roadmap and to develop user stories and requirements for the Request for Proposals.

Modeling collaborative research

One of my goals was to bring OFA into the research process so that they could see actual grantee experiences and also observe what user research looks like in practice. I did this by:

- Creating opportunities for OFA staff to observe research sessions (with participant consent) and debriefs.

- Inviting OFA and HHS staff to synthesize anonymized notes from research and identify themes as a team.

- Facilitating a design studio with the OFA analysts closest to the TDRS to capture their vision for the future and inform lightweight, clickable prototypes for a new upload interface.

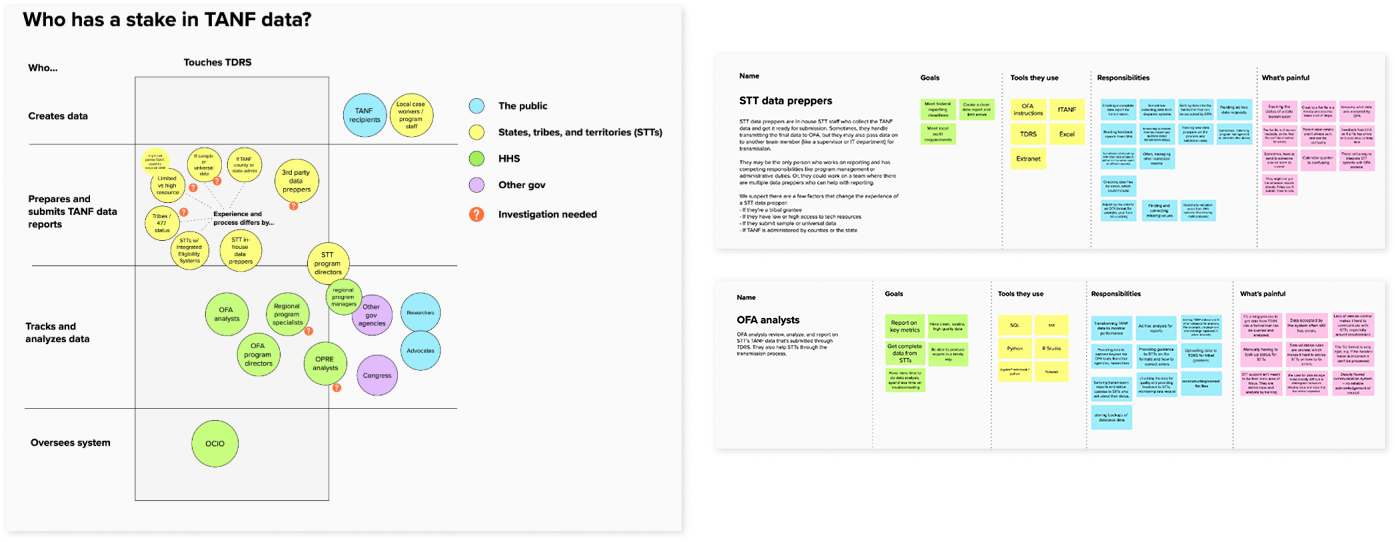

Audience segmentation and needs analysis

From research synthesis and service mapping, I worked with the OFA team to map key TDRS audiences and their needs for an updated reporting system. This analysis became the basis for user stories and scoping decisions in the RFP.

Integrating user needs into acquisition decisions and product roadmap

The expectations set in the contract are essential to the success of the project. The contract we designed not only clarified requirements, but also modeled the agile, iterative, user-centered practices that lower the risk of failure in government IT projects and that we wanted vendors to provide.

To emphasize the need for continuous user research, I worked with the team to solicit research plans and findings from the vendor community and set that as a requirement for submitting a proposal. Our research findings became documentation on core audiences and refreshed user stories.

Once proposals came in, I advised on vendor selection and provided expert review of research plans, design staffing proposals, and vendor responses in interviews. We ultimately hired an agile software vendor and design team within 4 months of joining the process, far faster than typical government awards.

Scaling user-centered practices for TDRS, and beyond

After selection, I designed a vendor kickoff schedule and led sessions that walked the new team through research findings and current design practices. I worked closely with our OFA product owner to ensure design work was regularly tested and accessible, and paired frequently with vendor designers to ensure a smooth, successful handoff.

I spread awareness of our user-centered procurement approach by co-leading a training for 300+ federal contracting officers on incorporating market research and iterative design practices in federal IT projects. The training discussed user-centered practices and specific tactics contracting officers could use to improve outcomes, and has been reused multiple times.

I also worked with my 18F teammates to create a heuristics toolkit for supporting product owners and a vendor onboarding schedule, both intended to support future teams at 18F and beyond. I worked with the 18F product manager and OFA partner to give a presentation on OFA's experience incorporating user research, modular procurement, and agile development on their team.

"180 degrees from our past experience"

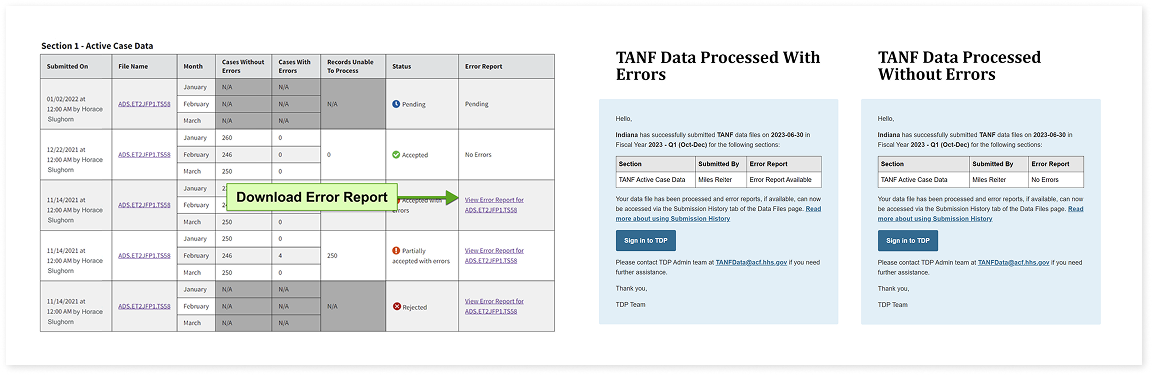

OFA has updated its 20+ year old reporting system to a more secure, user-centered platform now used by over 85% of grantees. The new system reflects many of the needs identified in early research, including submission history, clearer email notifications, and error reporting.

Our product owner described the 18F team's work a few years after the project:

"Designing the contract and the system the way we did — for OFA to have control and input and be so involved in the development — I think it facilitates the ability to be responsive. It’s just 180 degrees from our past experience. It's so refreshing." - HHS Product Owner